
Community Video Share -- Ollama, LangChain, and D-ID, a match made in Heaven - Fast!

over 1 year ago by mccormackjim3

A quick share with the community members working with the API... It's kind of incredible to think how far we have come and how fast things are moving. There are a lot of great projects in this weird, virtuous mix...

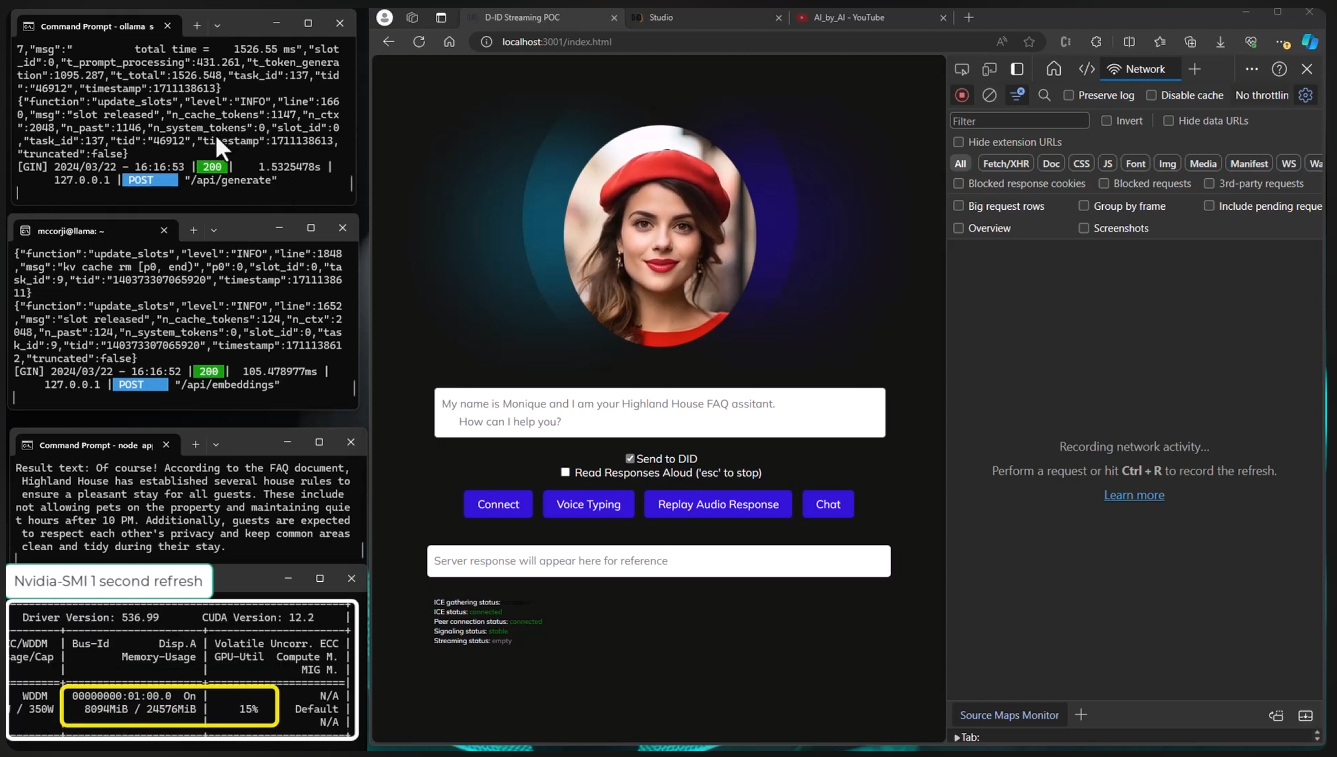

This is a D-ID Talking Avatar answering FAQ questions. The twist is that it's hosted locally, in 8 GB of VRAM, on a retail-grade, medium-duty Windows PC using open-source software. Pretty snappy: about a 2-second stream response on the basic queries! The latency is in the LangChain/LLM answer generation. I need a better PC :)

TL;DR: LangChain retrieval (RAG) with a quantized local Llama 2 7B model and a Nomic embeddings model, both running on Ollama.
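For anyone curious what that retrieval step looks like, here is a rough, dependency-free sketch. It is not the code from the repo: it skips LangChain and calls Ollama's local REST API directly with the standard library, and it leaves out the D-ID side entirely. The model tags (`nomic-embed-text`, `llama2:7b`), the default port, the prompt wording, and the sample FAQ entries are all assumptions for illustration.

```python
import json
import math
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434"


def _post(path, payload):
    """POST JSON to the local Ollama server and return the decoded reply."""
    req = urllib.request.Request(
        OLLAMA_URL + path,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())


def ollama_embed(text, model="nomic-embed-text"):
    """Embed one string via Ollama (model tag is an assumption)."""
    return _post("/api/embeddings", {"model": model, "prompt": text})["embedding"]


def ollama_generate(prompt, model="llama2:7b"):
    """Get a non-streamed completion via Ollama (model tag is an assumption)."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return _post("/api/generate", payload)["response"]


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(question, docs, embed, k=2):
    """Return the k FAQ entries most similar to the question."""
    q = embed(question)
    ranked = sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


def build_prompt(question, context):
    """Stuff the retrieved entries into a simple prompt (hypothetical wording)."""
    joined = "\n".join(f"- {c}" for c in context)
    return (
        "Answer using only this FAQ context:\n"
        f"{joined}\n\nQuestion: {question}\nAnswer:"
    )


def answer(question, docs, embed=ollama_embed, generate=ollama_generate):
    """Retrieve context, then ask the local LLM for the answer text."""
    return generate(build_prompt(question, retrieve(question, docs, embed)))


if __name__ == "__main__":
    # Hypothetical FAQ entries; a real setup would load and chunk documents.
    faq = [
        "Refunds are available within 30 days of purchase.",
        "Support is open Monday to Friday, 9am to 5pm.",
    ]
    print(answer("Can I get a refund?", faq))
```

The answer text returned by `answer` is what would then be handed to the avatar to speak. Re-embedding every FAQ entry on each query is wasteful; a vector store, which is what LangChain retrievers normally sit on top of, would cache those embeddings and likely cut some of the latency.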

I'll share the link to my GitHub repo over on the D-ID Discord for those wanting to try it...