Inquiry on Real-time Video Streaming with OpenAI GPT and D-ID API Integration

class DidAgent:

def **init**(self):

self.did_api_key = os.getenv("DID_API_KEY")

if not self.did_api_key:

raise ValueError("DID_API_KEY가 없습니다. .env 파일을 확인하세요.")

self.encoded_did_api_key = "Basic " + base64.b64encode(self.did_api_key.encode()).decode("utf-8")

# API URL과 헤더 설정

self.api_url = "https://api.d-id.com/talks/streams"

self.headers = {

"Authorization": self.encoded_did_api_key,

"Content-Type": "application/json",

"Accept": "application/json"

}

print("[DEBUG] Authorization Header:", self.headers["Authorization"])

# 캐릭터별 음성 및 스타일 설정

self.character_config = {

"Facilitator": {

"voice": "ko-KR-SunyeongNeural",

"style": "friendly",

"image_url": "https://i.ibb.co/Db46WnT/F.png"

},

"Student A": {

"voice": "ko-KR-HyunjunNeural",

"style": "neutral",

"image_url": "https://i.ibb.co/zxj1f5H/A.png"

},

"Student B": {

"voice": "ko-KR-InJoonNeural",

"style": "enthusiastic",

"image_url": "https://i.ibb.co/ZLCSgMR/B.png"

},

"Student C": {

"voice": "ko-KR-JiMinNeural",

"style": "calm",

"image_url": "https://i.ibb.co/vkM0QQj/C.png"

}

}

def create_did_animation(self, character_name, text):

# 캐릭터 설정 가져오기

config = self.character_config.get(character_name)

if not config:

print(f"[ERROR] 캐릭터 {character_name}에 대한 설정이 없습니다.")

return None, None, None

# 요청 데이터 설정

payload = {

"source_url": config["image_url"],

"script": {

"type": "text",

"input": text

},

"config": {

"voice": config["voice"],

"style": config["style"]

}

}

# API 요청 보내기

response = requests.post(self.api_url, headers=self.headers, json=payload)

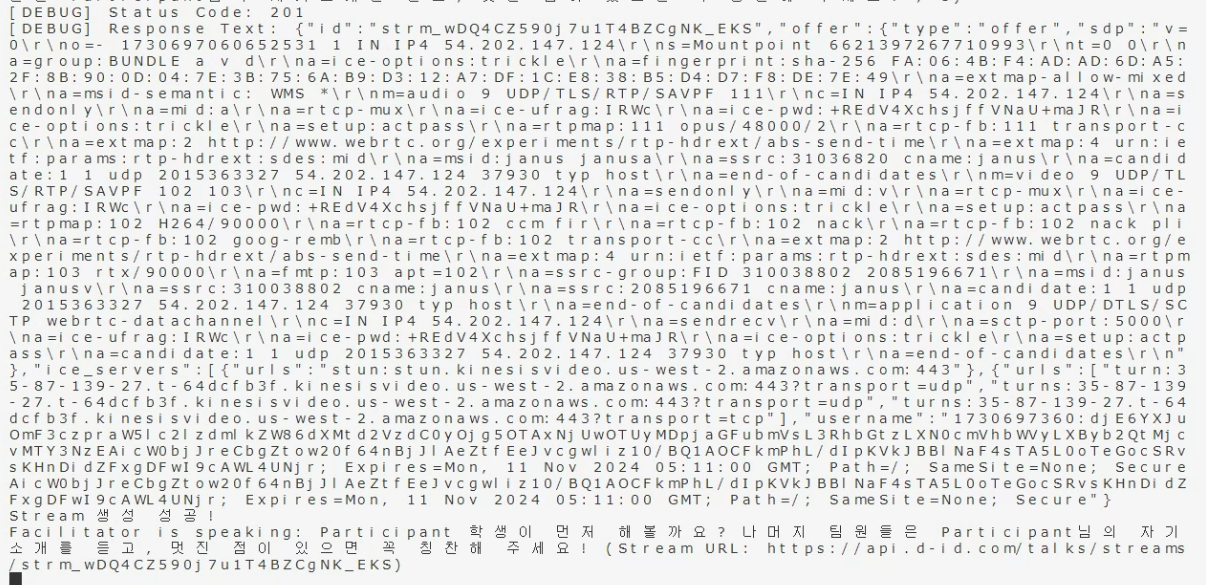

print("[DEBUG] Status Code:", response.status_code)

print("[DEBUG] Response Text:", response.text)

# 응답 확인

if response.status_code == 201:

response_data = response.json()

stream_id = response_data.get("id")

session_id = response_data.get("session_id")

sdp_offer = response_data.get("offer", {}).get("sdp")

print("Stream 생성 성공!")

return stream_id, session_id, sdp_offer

else:

print(f"[ERROR] Failed to create animation for {character_name}: {response.text}")

return None, None, None

def send_sdp_answer(self, stream_id, session_id, sdp_answer):

# URL 설정

url = f"{self.api_url}/{stream_id}/sdp"

# 요청 데이터 설정

payload = {

"answer": {"type": "answer", "sdp": sdp_answer},

"session_id": session_id

}

# API 요청 보내기

response = requests.post(url, headers=self.headers, json=payload)

if response.status_code == 200:

print("[INFO] SDP answer sent successfully")

return True

else:

print(f"[ERROR] Failed to send SDP answer: {response.text}")

return False

Hello,

I am working on a project where I am trying to integrate OpenAI's GPT API with D-ID's API to create real-time interactive video streaming. The goal is to convert GPT-generated text responses into animated videos using D-ID's API and stream these videos live to users.

Project Overview:

Text Generation: Generate conversational text responses using the OpenAI GPT API.

Animation & Streaming: Send these responses to the D-ID API to generate animated videos and stream them live to the user.

Current Implementation:

The backend, built with Flask, processes responses generated by GPT.

The backend then sends these responses to the D-ID API, receives a streaming URL and SDP (Session Description Protocol), and passes them to the client.

The frontend, built with React, sets up a WebRTC connection to play the video stream in real-time for each response.
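The hand-off from the backend to the client described above can be sketched as a small helper that packages the stream-creation response for the frontend. This is only an illustration under stated assumptions: `build_client_session` is a hypothetical name, and the `ice_servers` field is an assumption about the response body that my current code does not read. The `id`, `session_id`, and `offer` fields are the ones the backend code already uses.

```python
from typing import Any, Optional


def build_client_session(response_data: dict[str, Any]) -> Optional[dict[str, Any]]:
    """Package a stream-creation response for the WebRTC client.

    Returns None when a field the client cannot work without is missing.
    """
    stream_id = response_data.get("id")
    session_id = response_data.get("session_id")
    offer = response_data.get("offer") or {}

    # Without all three values the client can neither apply the offer
    # nor send its SDP answer back for this stream.
    if not (stream_id and session_id and offer.get("sdp")):
        return None

    return {
        "stream_id": stream_id,
        "session_id": session_id,
        "offer": {"type": offer.get("type", "offer"), "sdp": offer["sdp"]},
        # Assumed field: ICE server list for the client's peer connection.
        "ice_servers": response_data.get("ice_servers", []),
    }
```

A Flask route could return this dictionary as JSON, and the React client would then apply `offer` as the remote description before posting its answer back through `send_sdp_answer`.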

Issue:

Although the API call succeeds and returns a "stream URL", the video does not play on the frontend.

Is something wrong with the way the video stream is being created? Does D-ID's API require specific steps or configuration for the generated stream to play correctly?
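A quick check that may help narrow this down before debugging the frontend is to confirm that the SDP offer returned by the API actually describes a video track. The sketch below is a diagnostic aid, not part of D-ID's API: `sdp_media_kinds` is a hypothetical helper that relies only on the standard SDP rule that each media section begins with an `m=` line.

```python
def sdp_media_kinds(sdp: str) -> list[str]:
    """Return the media type of each m= line in an SDP description, in order."""
    kinds = []
    for line in sdp.splitlines():
        # Each media section starts with "m=<media> <port> <proto> <fmt> ...".
        if line.startswith("m="):
            fields = line[2:].split()
            if fields:
                kinds.append(fields[0])
    return kinds
```

For example, logging `"video" in sdp_media_kinds(sdp_offer)` right after `create_did_animation` returns would show whether the offer advertises video at all. If it does, the problem is more likely in the answer, ICE exchange, or playback on the client side.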

Request for Assistance:

Please advise if there are particular requirements or settings in the D-ID API to ensure the generated video streams are playable and display correctly on the frontend.

If there are any recommended methods or steps for reliably integrating real-time video streams using OpenAI GPT-generated text and D-ID's animation capabilities, I would appreciate your guidance.

Thank you for your support in making this project successful.